
January 14, 2008

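Memory models differ from platform to platform, but the JVM memory model specification is uniform. Java's multithreading and concurrency problems ultimately show up in the Java memory model: thread safety is nothing more than controlling the order in which multiple threads access or modify a shared resource. In summary, the Java memory model has to solve two main problems: visibility and ordering. Computers have caches, so the processor does not fetch from main memory every time it works on data. The JVM defines its own memory model that hides the memory-management details of the underlying platform; Java developers need to understand how, on top of that model, to solve the visibility and ordering problems of multithreaded code.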

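So what is visibility? Threads cannot pass data to one another directly; they can only communicate through shared variables. The Java Memory Model (JMM) specifies that the JVM has a main memory shared by all threads. An object created with new is allocated in main memory. Each thread also has its own working memory, which holds copies of some objects from main memory; the size of a thread's working memory is of course limited. When a thread operates on an object, the steps are:

(1) Copy the variable from main memory into the current working memory (read and load)

(2) Execute code and change the value of the shared variable (use and assign)

(3) Flush the working-memory data back to main memory (store and write)

The JVM specification defines the operations a thread performs against main memory: read, load, use, assign, store, and write. When several threads each hold a copy of a shared variable in their working memory and one thread modifies it, the other threads should be able to see the modified value. That is the visibility problem in multithreading.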

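And what is ordering? A thread cannot reference a variable directly in main memory. If the variable is not in the thread's working memory, a copy is made from main memory into working memory (read-load), and the thread then references that copy. When the same thread references the field again, it may fetch a fresh copy from main memory (read-load-use) or it may simply reuse the existing copy (use). In other words, the order of read, load, and use is left to the JVM implementation.

A thread also cannot assign to a field in main memory directly. It assigns the value to the copy in its working memory (assign), and that copy is later synchronized back to main memory (store-write); exactly when that happens is up to the JVM implementation. When the same thread assigns to a field repeatedly, for example: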

Java code

    for (int i = 0; i < 10; i++)
        a++;

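the thread may assign only to the copy in working memory and synchronize to main memory only after the last assignment, so the order of assign, store, and write is also left to the JVM implementation. Suppose there is a shared variable x and thread a executes x = x + 1. From the description above, x = x + 1 is not an atomic operation. It executes as follows:

1 Read a copy of x from main memory into working memory

2 Add 1 to x

3 Write the incremented value of x back to main memory

If another thread b executes x = x - 1, it proceeds as follows:

1 Read a copy of x from main memory into working memory

2 Subtract 1 from x

3 Write the decremented value of x back to main memory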
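One way to see why these three-step sequences are not atomic is to replay them by hand. The sketch below is a deterministic simulation, not real threading: `run`, `main`, and `working` are hypothetical names chosen for illustration, with `main` standing in for main memory and `working` for each thread's private working memory.

```python
def run(schedule, x=10):
    """Replay read/modify/write steps for threads 'a' (+1) and 'b' (-1).

    `schedule` is a list of (thread, step) pairs executed in order.
    """
    main = {"x": x}                 # shared main memory
    working = {}                    # one private copy of x per thread
    delta = {"a": +1, "b": -1}
    for thread, step in schedule:
        if step == "read":          # read + load: copy x into working memory
            working[thread] = main["x"]
        elif step == "modify":      # use + assign: change only the private copy
            working[thread] += delta[thread]
        elif step == "write":       # store + write: flush the copy to main memory
            main["x"] = working[thread]
        else:
            raise ValueError(step)
    return main["x"]


serial = [("a", "read"), ("a", "modify"), ("a", "write"),
          ("b", "read"), ("b", "modify"), ("b", "write")]
interleaved = [("a", "read"), ("b", "read"),
               ("a", "modify"), ("a", "write"),
               ("b", "modify"), ("b", "write")]

print(run(serial))       # 10
print(run(interleaved))  # 9
```

When each thread finishes all three steps before the other starts, x ends at 10. When both threads read before either writes, the later write overwrites the earlier one and an update is lost.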

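Clearly, the final value of x is unreliable. Suppose x is currently 10, thread a adds 1, and thread b subtracts 1. On the surface x should still end up as 10, but with multiple threads the following can happen:

1: Thread a reads a copy of x from main memory into its working memory; x in working memory is 10

2: Thread b reads a copy of x from main memory into its working memory; x in working memory is 10

3: Thread a adds 1 to x in its working memory; x in working memory is 11

4: Thread a commits x to main memory; x in main memory is 11

5: Thread b subtracts 1 from x in its working memory; x in working memory is 9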

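6: Thread b commits x to main memory; x in main memory is 9

The JVM memory model and the eden space

What exactly is a thread's "working memory"? Some people think it is the thread's stack, but that is not correct. According to the description of thread working memory in the JLS (Java Language Specification), a thread's working memory is just an abstraction of the CPU's registers and caches.

Many readers may find this puzzling: how does a discussion of the JVM memory model end up at the CPU? I think this needs explaining. Leaving the Java virtual machine aside, we all know that on a modern computer the CPU does not always read data from memory while computing. Its lookup order is registers, then cache, then main memory. A thread consumes CPU time. When it computes, the original data comes from memory; data that is read frequently during the computation is kept in registers and cache, and once the computation is done the cached data should be written back to memory at an appropriate time. When several threads read and write the same memory location at the same time, concurrency problems arise, involving three properties: atomicity, ordering, and visibility. In the article "Thread Safety Summary" (《线程安全总结》), I grouped atomicity and ordering together as "ordered multithreaded execution" to make things easier to understand. Every platform that supports multithreading faces this problem, and a language with thread support running on such a platform should provide a solution: synchronized, volatile, locking mechanisms (synchronized blocks, ready queues, blocking queues), and so on. These are only language-level tools; we need to understand them at a deeper level rather than just knowing that synchronized guarantees synchronization.

What I am describing here is the JVM memory model as something dynamic and oriented toward multithreaded concurrency. I follow the JLS term "working memory" simply to avoid dragging in too many low-level details, because the Thread Safety Summary article is meant to explain Java thread synchronization at the language level and when to use each keyword.

Now for the JVM's eden space. JVM memory is divided into several areas:

1. Program counter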

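Every Java thread has a program counter that records which instruction of the current method the thread is executing.

2. Thread stack

Each time a thread executes a method, a frame is created to hold the local variable table, operand stack, dynamic linking information, method return address, and so on. Each method call, from invocation to completion, corresponds to one frame being pushed onto and popped off the VM stack. If a thread requests a stack depth greater than the virtual machine allows, a StackOverflowError is thrown; if the VM stack can grow dynamically (the VM Spec also permits fixed-size VM stacks) and not enough memory can be obtained when it grows, an OutOfMemoryError is thrown.

3. Native method stack

4. Heap

Each thread's stack is private to that thread, while the heap is shared by all threads. When we create an object with new, it is allocated on the heap. The heap is not a simple concept, though: it is split into several regions, and the reason is garbage collection. Current JVMs collect by generation, and the heap is roughly divided into three parts: the young generation, the old generation, and the permanent generation (a logical area). The young generation is further divided into the eden space and two survivor spaces, s0 and s1. Newly created objects, mostly small and short-lived ones, are placed in eden. When eden fills up, a small-scale collection (minor GC) runs, and objects that survive enough minor collections are moved from the young generation to the old generation. When the old generation fills up, a large-scale collection (major GC) runs.

5. Method area

In the HotSpot JVMs of this era (before Java 8) this is the permanent generation (Permanent Generation). The method area holds the structural information of every class, including the constant pool, field descriptions, method descriptions, and so on. The VM Spec places very loose restrictions on this area: like the Java heap it does not need contiguous memory and may be fixed-size or expandable, and an implementation may even choose not to garbage-collect it. Garbage collection happens relatively rarely here, but it is not true, as some descriptions claim, that the permanent generation is never collected (at least for the mainstream commercial JVM implementations). Collection here mainly reclaims constant-pool entries and unloads classes, although the results are usually unimpressive, especially for class unloading, whose conditions are quite strict.

6. Constant pool

Besides the class version, fields, methods, interfaces, and similar descriptions, a class file contains a constant pool table (constant_pool table) that stores constants known at compile time; this content is placed in the method area (permanent generation) after the class is loaded. The Java language does not require that only constants preset in the class file at compile time can enter the method area's constant pool, however; new entries can also be added at run time, the most typical example being String.intern().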

ArrayList

See also: the transient, volatile, and strictfp keywords in Java, http://www.iteye.com/topic/52957

(1) ArrayList is backed by an Object array, private transient Object[] elementData; when it is instantiated with the no-argument constructor, the default array length is 10.

(2) Implementation of the add method

    public boolean add(E e) {
        // ensureCapacityInternal checks whether adding a new element requires a larger array and grows it if so
        ensureCapacityInternal(size + 1);  // method called in JDK 7; JDK 5 used ensureCapacity
        elementData[size++] = e;           // store the element and increment size
        return true;
    }

Implementation of ensureCapacityInternal in JDK 7

    private void ensureCapacityInternal(int minCapacity) {
        modCount++;  // modification count
        // overflow-conscious code
        if (minCapacity - elementData.length > 0)
            grow(minCapacity);  // the array needs to grow
    }

    /**
     * The maximum size of array to allocate.
     * Some VMs reserve some header words in an array.
     * Attempts to allocate larger arrays may result in
     * OutOfMemoryError: Requested array size exceeds VM limit
     */
    private static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;  // why minus 8: see line 2 of the comment above

    /**
     * Increases the capacity to ensure that it can hold at least the
     * number of elements specified by the minimum capacity argument.
     *
     * @param minCapacity the desired minimum capacity
     */
    private void grow(int minCapacity) {
        // overflow-conscious code
        int oldCapacity = elementData.length;
        // the new capacity is 3/2 of the old one, using a shift for efficiency; JDK 5 used (oldCapacity * 3) / 2 + 1
        int newCapacity = oldCapacity + (oldCapacity >> 1);
        if (newCapacity - minCapacity < 0)
            newCapacity = minCapacity;
        if (newCapacity - MAX_ARRAY_SIZE > 0)  // cap very large requests
            newCapacity = hugeCapacity(minCapacity);
        // minCapacity is usually close to size, so this is a win:
        elementData = Arrays.copyOf(elementData, newCapacity);
    }

    // the largest capacity that can be requested
    private static int hugeCapacity(int minCapacity) {
        if (minCapacity < 0) // overflow
            throw new OutOfMemoryError();
        return (minCapacity > MAX_ARRAY_SIZE) ?
            Integer.MAX_VALUE :
            MAX_ARRAY_SIZE;
    }
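To get a feel for the growth rule quoted above, the following Python sketch replays only the arithmetic of grow (old capacity plus half of it, but at least the requested minimum). It is an illustration, not the JDK code: `grow` and `capacities` are hypothetical helper names, and the MAX_ARRAY_SIZE cap is deliberately left out.

```python
def grow(old_capacity, min_capacity):
    """Mirror the quoted rule: old + (old >> 1), but never below min_capacity."""
    new_capacity = old_capacity + (old_capacity >> 1)
    if new_capacity < min_capacity:
        new_capacity = min_capacity
    return new_capacity


def capacities(n_adds, initial=10):
    """Return the sequence of capacities seen while appending n_adds elements."""
    capacity, seen = initial, [initial]
    for size in range(n_adds):
        if size + 1 > capacity:      # same check as ensureCapacityInternal
            capacity = grow(capacity, size + 1)
            seen.append(capacity)
    return seen


print(capacities(50))  # [10, 15, 22, 33, 49, 73]
```

Because each step multiplies the capacity by roughly 1.5, adding 50 elements triggers only five reallocations.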

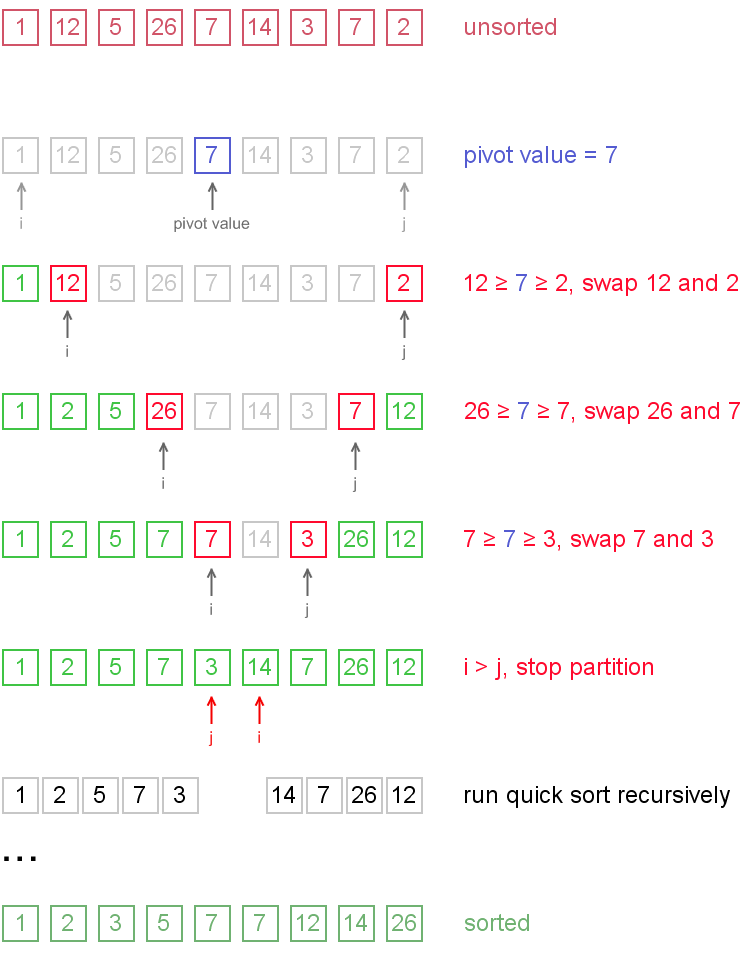

Quicksort

Quicksort is a fast sorting algorithm that is used not only for teaching but also widely in practice. On average it has O(n log n) complexity, which makes it suitable for sorting large volumes of data. The idea of the algorithm is quite simple, and once you understand it you can write quicksort as quickly as bubble sort.

Algorithm

Quicksort uses the divide-and-conquer strategy. The recursion step is described below:

- Choose a pivot value. We take the value of the middle element as the pivot, but it can be any value within the range of the values being sorted, even one that is not present in the array.

- Partition. Rearrange the elements so that all elements less than the pivot go to the left part of the array and all elements greater than the pivot go to the right part. Values equal to the pivot can stay in either part. Note that the array may be divided into parts of unequal size.

- Sort both parts. Apply the quicksort algorithm recursively to the left and right parts.

Partition algorithm in detail

There are two indices, i and j. At the very beginning of the partition algorithm, i points to the first element in the array and j points to the last one. The algorithm then moves i forward until it finds an element with a value greater than or equal to the pivot, and moves j backward until it finds an element with a value less than or equal to the pivot. If i ≤ j, the two elements are swapped, i steps to the next position (i + 1), and j steps to the previous one (j - 1). The algorithm stops when i becomes greater than j.

After partition, all values before the i-th element are less than or equal to the pivot, and all values after the j-th element are greater than or equal to the pivot.

Example. Sort {1, 12, 5, 26, 7, 14, 3, 7, 2} using quicksort.

Note that we show only the first recursion step here, to keep the example short. In fact, {1, 2, 5, 7, 3} and {14, 7, 26, 12} are then sorted recursively.
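The first partition step of this example can be checked with a short Python sketch. It follows the two-index scheme described above with the middle element as pivot; the function name `partition` and the printed slices are choices made for this illustration.

```python
def partition(arr, left, right):
    """Partition arr[left..right] in place around the middle element's value.

    Returns i, the start index of the right part.
    """
    i, j = left, right
    pivot = arr[(left + right) // 2]
    while i <= j:
        while arr[i] < pivot:    # advance i to an element >= pivot
            i += 1
        while arr[j] > pivot:    # retreat j to an element <= pivot
            j -= 1
        if i <= j:
            arr[i], arr[j] = arr[j], arr[i]
            i += 1
            j -= 1
    return i


data = [1, 12, 5, 26, 7, 14, 3, 7, 2]
split = partition(data, 0, len(data) - 1)
print(data[:split], data[split:])  # [1, 2, 5, 7, 3] [14, 7, 26, 12]
```

The two printed slices are exactly the parts that the recursion then sorts independently.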

Why does it work?

The partition step divides the array into two parts such that every element a in the left part is less than or equal to every element b in the right part; a and b also satisfy a ≤ pivot ≤ b. Once the recursive calls complete, both parts are sorted and, given the property above, the whole array is sorted.

Complexity analysis

On average quicksort has O(n log n) complexity, but a rigorous proof of this fact is not trivial and is not presented here; you can find it in [1]. In the worst case quicksort runs in O(n²) time, but on most "practical" data it works just fine and often outperforms other O(n log n) sorting algorithms.

Code snippets

Java

    int partition(int arr[], int left, int right) {
        int i = left;
        int j = right;
        int temp;
        int pivot = arr[(left + right) >> 1];  // value of the middle element
        while (i <= j) {
            while (arr[i] < pivot) {  // skip elements that already belong to the left part
                i++;
            }
            while (arr[j] > pivot) {  // skip elements that already belong to the right part
                j--;
            }
            if (i <= j) {
                temp = arr[i];
                arr[i] = arr[j];
                arr[j] = temp;
                i++;
                j--;
            }
        }
        return i;
    }

    void quickSort(int arr[], int left, int right) {
        int index = partition(arr, left, right);
        if (left < index - 1) {
            quickSort(arr, left, index - 1);
        }
        if (index < right) {
            quickSort(arr, index, right);
        }
    }

Python

    def quickSort(L, left, right):
        if left >= right:  # zero or one element: nothing to sort
            return L
        i = left
        j = right
        pivot = L[(left + right) >> 1]
        # partition
        while i <= j:
            while L[i] < pivot:
                i += 1
            while L[j] > pivot:
                j -= 1
            if i <= j:
                L[i], L[j] = L[j], L[i]
                i += 1
                j -= 1
        # recursion
        if left < j:
            quickSort(L, left, j)
        if i < right:
            quickSort(L, i, right)
        return L

|

Insertion Sort

Insertion sort is one of the O(n²) sorting algorithms. Unlike many sorting algorithms with quadratic complexity, it is actually used in practice for sorting small arrays of data; for instance, it is used to improve quicksort routines. Some sources note that people use the same algorithm when ordering items by hand, for example a hand of cards.

Algorithm

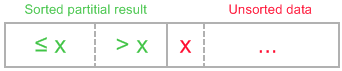

Insertion sort somewhat resembles selection sort. The array is conceptually divided into two parts, a sorted one and an unsorted one. At the beginning, the sorted part contains the first element of the array and the unsorted part contains the rest. At every step, the algorithm takes the first element of the unsorted part and inserts it into the right place in the sorted part. When the unsorted part becomes empty, the algorithm stops. Sketched out, one step of insertion sort looks like this:

becomes

The idea of the sketch was originally posted here.

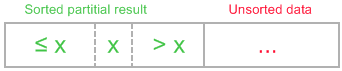

Let us see an example of insertion sort routine to make the idea of algorithm clearer.

Example. Sort {7, -5, 2, 16, 4} using insertion sort.
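The example can be followed pass by pass with a short Python sketch. It uses the shifting form of insertion and records the array after each element is inserted; `insertion_sort_trace` is a hypothetical helper written for this illustration.

```python
def insertion_sort_trace(values):
    """Sort a copy of `values` and return the list state after each pass."""
    arr = list(values)
    states = [list(arr)]              # initial state: sorted part is arr[:1]
    for i in range(1, len(arr)):
        new_value = arr[i]
        j = i
        while j > 0 and arr[j - 1] > new_value:
            arr[j] = arr[j - 1]       # shift the larger element one slot right
            j -= 1
        arr[j] = new_value            # single write into the final position
        states.append(list(arr))
    return states


for state in insertion_sort_trace([7, -5, 2, 16, 4]):
    print(state)
# [7, -5, 2, 16, 4]
# [-5, 7, 2, 16, 4]
# [-5, 2, 7, 16, 4]
# [-5, 2, 7, 16, 4]
# [-5, 2, 4, 7, 16]
```

The third pass leaves the array unchanged because 16 is already in place, which is the adaptive behavior that makes insertion sort fast on nearly sorted input.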

The ideas of insertion

The main operation of the algorithm is insertion. The task is to insert a value into the sorted part of the array. Let us see the variants of how we can do it.

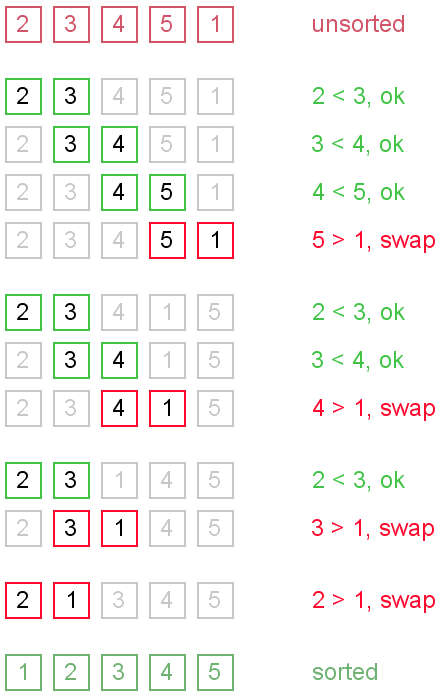

"Sifting down" using swaps

The simplest way to insert the next element into the sorted part is to sift it down until it occupies the correct position. Initially the element sits right after the sorted part. At each step the algorithm compares the element with the one before it and, if they are in reversed order, swaps them. Let us see an illustration.

This approach writes the sifted element to a temporary position many times. The next implementation eliminates those unnecessary writes.

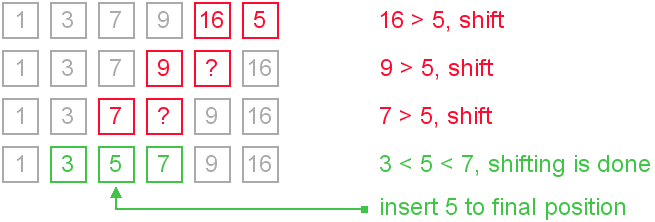

Shifting instead of swapping

We can modify the previous algorithm so that it writes the sifted element only to its final correct position. Let us see an illustration.

It is the most commonly used modification of the insertion sort.

Using binary search

It is reasonable to use binary search to find the proper place for insertion. This variant is called binary insertion sort. After the position for insertion is found, the algorithm shifts part of the array and inserts the element. This version makes fewer comparisons, but the overall average complexity remains O(n²). From a practical point of view this improvement is not very important, because insertion sort is used on quite small data sets.
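A minimal sketch of binary insertion sort in Python, assuming the standard library's `bisect_right` as the binary search; `binary_insertion_sort` is a hypothetical name, and the function returns a sorted copy rather than sorting in place.

```python
from bisect import bisect_right


def binary_insertion_sort(values):
    """Return a sorted copy; each insertion point is found by binary search."""
    arr = list(values)
    for i in range(1, len(arr)):
        new_value = arr[i]
        # Search only the sorted prefix arr[0:i]. bisect_right places the new
        # element after any equal ones, so the sort stays stable.
        pos = bisect_right(arr, new_value, 0, i)
        arr[pos + 1:i + 1] = arr[pos:i]   # shift the tail one slot to the right
        arr[pos] = new_value
    return arr


print(binary_insertion_sort([7, -5, 2, 16, 4]))  # [-5, 2, 4, 7, 16]
```

The search takes O(log n) comparisons per element, but the slice assignment still moves O(n) elements, which is why the overall complexity stays quadratic.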

Complexity analysis

Insertion sort's overall complexity is O(n²) on average, regardless of the method of insertion. On almost sorted arrays insertion sort performs better, down to O(n) when applied to an already sorted array. The number of writes is O(n²) on average, but the number of comparisons depends on the insertion method: it is O(n²) when shifting or swapping is used and O(n log n) for binary insertion sort.

From the point of view of practical application, the average complexity of insertion sort is not so important. As mentioned above, insertion sort is applied to quite small data sets (from 8 to 12 elements), so "practical performance" should be considered first. In practice insertion sort outperforms most quadratic sorting algorithms, such as selection sort or bubble sort.

Insertion sort properties

- adaptive (performance adapts to the initial order of elements);

- stable (insertion sort retains relative order of the same elements);

- in-place (requires constant amount of additional space);

- online (new elements can be added during the sort).

Code snippets

We show insertion with shifts in a Java implementation and in a Python code snippet.

Java implementation

    void insertionSort(int[] arr) {
        int i, j, newValue;
        for (i = 1; i < arr.length; i++) {
            newValue = arr[i];
            j = i;
            // shift larger elements of the sorted part one position to the right
            while (j > 0 && arr[j - 1] > newValue) {
                arr[j] = arr[j - 1];
                j--;
            }
            arr[j] = newValue;
        }
    }

Python implementation

    def insertionSort(L):
        for i in range(1, len(L)):
            j = i
            newValue = L[i]
            # compare against newValue, because L[j] is overwritten while shifting
            while j > 0 and L[j - 1] > newValue:
                L[j] = L[j - 1]
                j = j - 1
            L[j] = newValue

|

Binary search algorithm

In general, to find a value in an unsorted array we have to look through its elements one by one until the value is found. If the value is absent from the array, we go through all the elements. On average, the complexity of such an algorithm is proportional to the length of the array.

The situation changes significantly when the array is sorted. If we know it is, random access can be used very efficiently to find the value quickly. The cost of the search drops to the binary logarithm of the array length. For reference, log2(1 000 000) ≈ 20. This means that in the worst case the algorithm takes about 20 steps to find a value in a sorted array of a million elements, or to report that it is not present in the array.

Algorithm

The algorithm is quite simple. It can be implemented either recursively or iteratively:

- get the middle element;

- if the middle element equals the searched value, the algorithm stops;

- otherwise, two cases are possible:

- the searched value is less than the middle element. In this case, go back to step 1 for the part of the array before the middle element.

- the searched value is greater than the middle element. In this case, go back to step 1 for the part of the array after the middle element.

Now we should define when the iterations stop. The first case is when the searched element is found. The second is when the subarray has no elements; in that case we can conclude that the searched value is not present in the array.

Examples

Example 1. Find 6 in {-1, 5, 6, 18, 19, 25, 46, 78, 102, 114}. The part of the array still being searched is shown in brackets.

Step 1 (middle element is 19 > 6): [-1 5 6 18 19 25 46 78 102 114]

Step 2 (middle element is 5 < 6): [-1 5 6 18] 19 25 46 78 102 114

Step 3 (middle element is 6 == 6): -1 5 [6 18] 19 25 46 78 102 114

Example 2. Find 103 in {-1, 5, 6, 18, 19, 25, 46, 78, 102, 114}.

Step 1 (middle element is 19 < 103): [-1 5 6 18 19 25 46 78 102 114]

Step 2 (middle element is 78 < 103): -1 5 6 18 19 [25 46 78 102 114]

Step 3 (middle element is 102 < 103): -1 5 6 18 19 25 46 78 [102 114]

Step 4 (middle element is 114 > 103): -1 5 6 18 19 25 46 78 102 [114]

Step 5 (searched value is absent): -1 5 6 18 19 25 46 78 102 114
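Both examples can be reproduced with an iterative version of the search. The sketch below is one possible Python formulation; `binary_search` and the probe counter are additions made for illustration, and the middle index is taken as low + (high - low) // 2 so that it matches the middle elements listed in the steps.

```python
def binary_search(values, target):
    """Iterative binary search over a sorted list.

    Returns (index, probes); index is -1 when target is absent.
    """
    low, high = 0, len(values) - 1
    probes = 0
    while low <= high:                 # stop when the searched part is empty
        middle = low + (high - low) // 2
        probes += 1
        if values[middle] == target:
            return middle, probes
        if values[middle] > target:
            high = middle - 1          # continue in the part before middle
        else:
            low = middle + 1           # continue in the part after middle
    return -1, probes


data = [-1, 5, 6, 18, 19, 25, 46, 78, 102, 114]
print(binary_search(data, 6))    # (2, 3)
print(binary_search(data, 103))  # (-1, 4)
```

The value 6 is found at index 2 on the third probe, and 103 is reported absent after four probes, when the searched part becomes empty.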

Complexity analysis

A huge advantage of this algorithm is that its complexity depends on the array size logarithmically in the worst case. In practice this means the algorithm performs about log2(n) iterations, which is a very small number even for big arrays. This is easy to prove: at every step the size of the searched part is reduced by half, and the algorithm stops when there are no elements left to search. Therefore, solving the following inequality in whole numbers:

n / 2^iterations > 0

results in

iterations <= log2(n).

This means that the time complexity of binary search is O(log2(n)).

Code snippets

Recursive solutions in Java and Python are shown below.

Java

int binarySearch(int[] array, int value, int left, int right) {

if (left > right)

return -1;

int middle = left + (right-left) / 2;

if (array[middle] == value)

return middle;

if (array[middle] > value)

return binarySearch(array, value, left, middle - 1);

else

return binarySearch(array, value, middle + 1, right);

}

Python

    def biSearch(L, e, first, last):
        if first > last:  # empty range, for example an empty list
            return False
        if last - first < 2:
            return L[first] == e or L[last] == e
        mid = first + (last - first) // 2  # integer division
        if L[mid] == e:
            return True
        if L[mid] > e:
            return biSearch(L, e, first, mid - 1)
        return biSearch(L, e, mid + 1, last)

|

Merge sort is an O(n log n) comparison-based sorting algorithm. Most implementations produce a stable sort, meaning that the implementation preserves the input order of equal elements in the sorted output. It is a divide-and-conquer algorithm. Merge sort was invented by John von Neumann in 1945. A detailed description and analysis of bottom-up mergesort appeared in a report by Goldstine and von Neumann as early as 1948.

Divide-and-conquer algorithm: 1. split the problem into several subproblems of the same type; 2. solve them independently; 3. combine the solutions.

Python implementation

    def mergeSort(L):
        if len(L) < 2:
            return L
        middle = len(L) // 2
        left = mergeSort(L[:middle])
        right = mergeSort(L[middle:])
        together = merge(left, right)
        return together

Algorithm to merge sorted arrays

This article presents an algorithm for merging two sorted arrays. It is a good exercise in working with several arrays and handling read and write indices. The algorithm also has practical applications, for instance in merge sort.

Merge algorithm

Assume that both arrays are sorted in ascending order and that we want the resulting array to keep the same order. The algorithm to merge two arrays A[0..m-1] and B[0..n-1] into an array C[0..m+n-1] is as follows:

- Introduce read indices i and j to traverse arrays A and B, respectively. Introduce a write index k to store the position of the first free cell in the resulting array. Initially i = j = k = 0.

- At each step: if both indices are in range (i < m and j < n), choose the minimum of (A[i], B[j]) and write it to C[k]. Otherwise go to step 4.

- Increase by one both k and the index of the array in which the minimum was found. Repeat step 2.

- Copy the remaining values from the array whose index is still in range to the resulting array.

Enhancements

The algorithm can be enhanced in many ways. For instance, it is reasonable to check whether A[m - 1] < B[0] or B[n - 1] < A[0]. In either case there is no need for further comparisons; the algorithm can just copy the source arrays into the resulting one in the right order. More complicated enhancements may include searching for the interleaving parts and running the merge algorithm only on them. This can save a lot of time when the sizes of the merged arrays differ by a large factor.
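The first enhancement is easy to sketch in Python. The function below checks whether the two inputs overlap at all before falling back to the regular merge; `merge_with_fast_path` is a hypothetical name, and using <= for the first check (so that equal boundary values also take the shortcut) is a small assumption beyond the strict < in the text.

```python
def merge_with_fast_path(a, b):
    """Merge two ascending lists, skipping comparisons when they do not overlap."""
    if not a or not b:
        return list(a) + list(b)
    if a[-1] <= b[0]:              # every element of a precedes b
        return list(a) + list(b)
    if b[-1] < a[0]:               # every element of b precedes a
        return list(b) + list(a)
    result = []
    i = j = 0
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:           # <= takes equal elements from a first (stable)
            result.append(a[i])
            i += 1
        else:
            result.append(b[j])
            j += 1
    result.extend(a[i:])           # at most one of these two is non-empty
    result.extend(b[j:])
    return result


print(merge_with_fast_path([1, 2, 3], [4, 5]))     # [1, 2, 3, 4, 5]
print(merge_with_fast_path([1, 4, 9], [2, 4, 10])) # [1, 2, 4, 4, 9, 10]
```

The shortcut costs two comparisons and pays off whenever one input lies entirely before the other, which happens often when merge sort runs on data that is already nearly sorted.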

Complexity analysis

Merge algorithm's time complexity is O(n + m). Additionally, it requires O(n + m) additional space to store resulting array.

Code snippets

Java implementation

    // size of C array must be equal to or greater than
    // the sum of the sizes of arrays A and B
    public void merge(int[] A, int[] B, int[] C) {
        int i = 0, j = 0, k = 0;
        int m = A.length;
        int n = B.length;
        while (i < m && j < n) {
            if (A[i] <= B[j]) {
                C[k] = A[i];
                i++;
            } else {
                C[k] = B[j];
                j++;
            }
            k++;
        }
        // copy whatever remains in A
        while (i < m) {
            C[k] = A[i];
            i++;
            k++;
        }
        // copy whatever remains in B
        while (j < n) {
            C[k] = B[j];
            j++;
            k++;
        }
    }

Python implementation

    def merge(left, right):
        result = []
        i, j = 0, 0
        while i < len(left) and j < len(right):
            if left[i] <= right[j]:
                result.append(left[i])
                i = i + 1
            else:
                result.append(right[j])
                j = j + 1
        while i < len(left):
            result.append(left[i])
            i = i + 1
        while j < len(right):
            result.append(right[j])
            j = j + 1
        return result

Merge sort:

    import operator

    def mergeSort(L, compare=operator.lt):
        if len(L) < 2:
            return L[:]
        else:
            middle = len(L) // 2
            left = mergeSort(L[:middle], compare)
            right = mergeSort(L[middle:], compare)
            return merge(left, right, compare)

    def merge(left, right, compare):
        result = []
        i, j = 0, 0
        while i < len(left) and j < len(right):
            # take from the right only when it is strictly smaller,
            # so equal elements keep their input order (stable sort)
            if compare(right[j], left[i]):
                result.append(right[j])
                j += 1
            else:
                result.append(left[i])
                i += 1
        while i < len(left):
            result.append(left[i])
            i += 1
        while j < len(right):
            result.append(right[j])
            j += 1
        return result

|

Selection Sort

Selection sort is one of the O(n²) sorting algorithms, which makes it quite inefficient for sorting large volumes of data. It is notable for its programming simplicity, and it can outperform other sorts in certain situations (see the complexity analysis for details).

Algorithm

The idea of the algorithm is quite simple. The array is conceptually divided into two parts, a sorted one and an unsorted one. At the beginning the sorted part is empty, while the unsorted part contains the whole array. At every step the algorithm finds the minimal element in the unsorted part and adds it to the end of the sorted part. When the unsorted part becomes empty, the algorithm stops.

When the algorithm sorts an array, it swaps the first element of the unsorted part with the minimal element, which then becomes part of the sorted part. This implementation of selection sort is not stable. If a linked list is sorted instead and the minimal element is linked onto the end of the sorted part rather than swapped, selection sort is stable.

Let us see an example of sorting an array to make the idea of selection sort clearer.

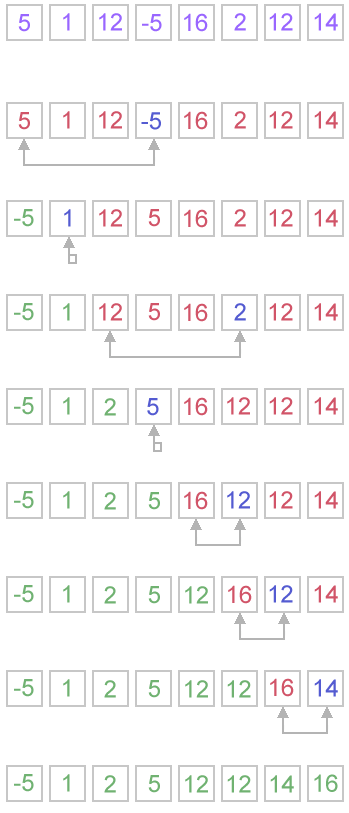

Example. Sort {5, 1, 12, -5, 16, 2, 12, 14} using selection sort.

Complexity analysis

Selection sort stops when the unsorted part becomes empty. At every step the number of unsorted elements decreases by one, so selection sort makes n - 1 steps of the outer loop (n is the number of elements in the array) before it stops. Every step of the outer loop requires finding the minimum of the unsorted part. Summing up, (n - 1) + (n - 2) + ... + 1 = n(n - 1)/2 comparisons, which is O(n²). The number of swaps may vary from zero (for a sorted array) to n - 1 (for example, a sorted array rotated by one position, such as {2, 3, 4, 5, 1}), which is O(n) swaps. The overall complexity of the algorithm is O(n²).

The fact that selection sort requires at most n - 1 swaps makes it very efficient in situations where a write operation is significantly more expensive than a read operation.
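The comparison and swap counts can be checked empirically. The Python sketch below instruments a plain selection sort with two counters; `selection_sort_counts` is a hypothetical helper, and it is run here on the array from the example earlier in this section.

```python
def selection_sort_counts(values):
    """Sort a copy of `values`; return (sorted_list, comparisons, swaps)."""
    arr = list(values)
    comparisons = swaps = 0
    n = len(arr)
    for i in range(n - 1):
        min_index = i
        for j in range(i + 1, n):        # find the minimum of the unsorted part
            comparisons += 1
            if arr[j] < arr[min_index]:
                min_index = j
        if min_index != i:               # swap only when something moves
            arr[i], arr[min_index] = arr[min_index], arr[i]
            swaps += 1
    return arr, comparisons, swaps


print(selection_sort_counts([5, 1, 12, -5, 16, 2, 12, 14]))
# ([-5, 1, 2, 5, 12, 12, 14, 16], 28, 5)
```

For n = 8 the comparison count is always 8 · 7 / 2 = 28 regardless of the input order, while the swap count depends on the data and never exceeds n - 1 = 7.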

Code snippets

Java

public void selectionSort(int[] arr) {

int i, j, minIndex, tmp;

int n = arr.length;

for (i = 0; i < n - 1; i++) {

minIndex = i;

for (j = i + 1; j < n; j++)

if (arr[j] < arr[minIndex])

minIndex = j;

if (minIndex != i) {

tmp = arr[i];

arr[i] = arr[minIndex];

arr[minIndex] = tmp;

}

}

}

Python

    def selectionSort(L):
        for i in range(len(L) - 1):
            minIndex = i
            minValue = L[i]
            j = i + 1
            while j < len(L):
                if minValue > L[j]:
                    minIndex = j
                    minValue = L[j]
                j += 1
            if minIndex != i:
                temp = L[i]
                L[i] = L[minIndex]
                L[minIndex] = temp

|

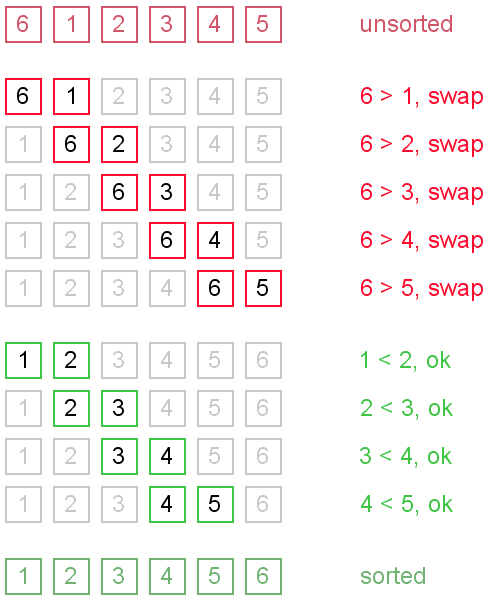

Bubble Sort

Bubble sort is a simple and well-known sorting algorithm. It is rarely used in practice, and its main application is as an introduction to sorting algorithms. Bubble sort is one of the O(n²) sorting algorithms, which makes it quite inefficient for sorting large volumes of data. Bubble sort is stable and adaptive.

Algorithm

- Compare each pair of adjacent elements from the beginning of an array and, if they are in reversed order, swap them.

- If at least one swap has been done, repeat step 1.

You can imagine that on every step big bubbles float to the surface and stay there. At the step, when no bubble moves, sorting stops. Let us see an example of sorting an array to make the idea of bubble sort clearer.

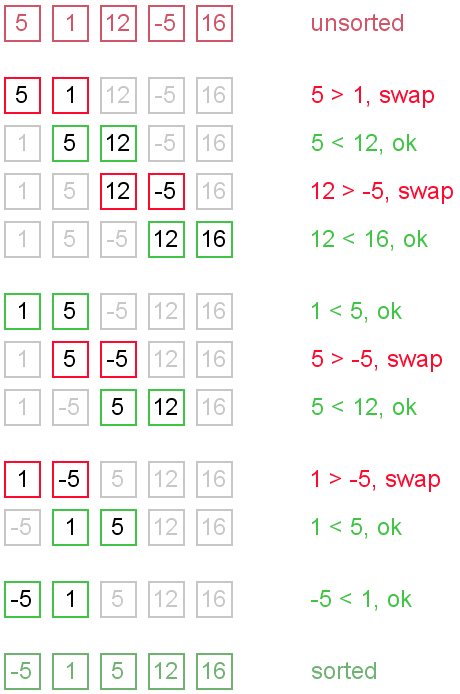

Example. Sort {5, 1, 12, -5, 16} using bubble sort.

Complexity analysis

The average and worst-case complexity of bubble sort is O(n²). It also makes O(n²) swaps in the worst case. Bubble sort is adaptive, which means that for an almost sorted array it can run in O(n). Avoid implementations that do not check on every pass whether the array is already sorted (that is, whether any swaps were made). This check is necessary to preserve the adaptive property.

Turtles and rabbits

One more problem with bubble sort is that its running time depends heavily on the initial order of the elements. Big elements (rabbits) go up fast, while small ones (turtles) go down very slowly. This problem is addressed by cocktail sort.

Turtle example. Though the array {2, 3, 4, 5, 1} is almost sorted, it takes O(n²) iterations to sort it. Element {1} is a turtle.

Rabbit example. Array {6, 1, 2, 3, 4, 5} is almost sorted too, but it takes O(n) iterations to sort it. Element {6} is a rabbit. This example demonstrates adaptive property of the bubble sort.
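The difference between the two examples shows up clearly when the passes are counted. The Python sketch below runs a bubble sort with the swap check on both arrays; `bubble_sort_passes` is a hypothetical helper that returns the sorted copy together with the number of passes, including the final pass that finds nothing to swap.

```python
def bubble_sort_passes(values):
    """Sort a copy of `values`; return (sorted_list, number_of_passes)."""
    arr = list(values)
    passes = 0
    swapped = True
    while swapped:                       # stop after a pass with no swaps
        swapped = False
        passes += 1
        for i in range(len(arr) - 1):
            if arr[i] > arr[i + 1]:
                arr[i], arr[i + 1] = arr[i + 1], arr[i]
                swapped = True
    return arr, passes


print(bubble_sort_passes([2, 3, 4, 5, 1]))     # ([1, 2, 3, 4, 5], 5)
print(bubble_sort_passes([6, 1, 2, 3, 4, 5]))  # ([1, 2, 3, 4, 5, 6], 2)
```

The turtle moves only one position per pass, so the first array needs a pass for every position it has to travel. The rabbit reaches its place in a single pass, and the second pass merely confirms that the array is sorted.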

Code snippets

There are several ways to implement bubble sort. Note that the "swaps" check is absolutely necessary in order to preserve the adaptive property.

Java

public void bubbleSort(int[] arr) {

boolean swapped = true;

int j = 0;

int tmp;

while (swapped) {

swapped = false;

j++;

for (int i = 0; i < arr.length - j; i++) {

if (arr[i] > arr[i + 1]) {

tmp = arr[i];

arr[i] = arr[i + 1];

arr[i + 1] = tmp;

swapped = true;

}

}

}

}

Python

    def bubbleSort(L):
        swapped = True
        while swapped:
            swapped = False
            for i in range(len(L) - 1):
                if L[i] > L[i + 1]:
                    temp = L[i]
                    L[i] = L[i + 1]
                    L[i + 1] = temp
                    swapped = True

|

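Summary: There are plenty of articles online about how binary search works, so I will not repeat them. Most of them, however, cover only the core of the algorithm; they are incomplete, and their efficiency can still be improved. For example, they compute the middle index as the sum of the start and end indices divided by 2, which overflows and ends in an exception when that sum exceeds the range of an int. The full article gives my code, which may still have gaps or shortcomings.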
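The overflow mentioned in this summary can be reproduced without a JVM. Python integers do not overflow, so the sketch below simulates Java's 32-bit int arithmetic by hand. It is not the author's code: `to_int32`, `java_div2`, `naive_mid`, and `safe_mid` are hypothetical helpers written for this illustration.

```python
def to_int32(value):
    """Wrap an integer to the signed 32-bit range, as Java int arithmetic does."""
    value &= 0xFFFFFFFF
    return value - 0x100000000 if value >= 0x80000000 else value


def java_div2(value):
    """Divide by 2 truncating toward zero, like Java's int division."""
    return -((-value) // 2) if value < 0 else value // 2


def naive_mid(low, high):
    """(low + high) / 2 with 32-bit wraparound on the sum."""
    return java_div2(to_int32(low + high))


def safe_mid(low, high):
    """low + (high - low) / 2, which cannot overflow when 0 <= low <= high."""
    return low + java_div2(to_int32(high - low))


low, high = 2_000_000_000, 2_100_000_000
print(naive_mid(low, high))  # -97483648
print(safe_mid(low, high))   # 2050000000
```

The naive midpoint wraps around to a negative number, which in Java would lead to an ArrayIndexOutOfBoundsException when used as an index. Computing the offset from low first keeps every intermediate value inside the int range.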

JNDI : Java Naming and Directory Interface (JNDI)

JNDI works in concert with other technologies in the Java Platform, Enterprise Edition (Java EE) to organize and locate components in a distributed computing environment.


Tomcat 6.0 data source configuration

Here is how I configure it:

1. Configure in Tomcat:

In context.xml under the conf directory of the Tomcat installation, inside

<Context></Context>

add the following:

<Resource name="jdbc/tango"

auth="Container"

type="javax.sql.DataSource"

maxActive="20"

maxIdle="10"

maxWait="1000"

username="root"

password="root"

driverClassName="com.mysql.jdbc.Driver" url="jdbc:mysql://localhost:3306/tango"

>

</Resource>

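Where:

name is the JNDI name of the resource

auth is the authentication mode, usually Container

type is the data source type; use the standard javax.sql.DataSource

maxActive is the maximum number of database connections in the pool

maxIdle is the maximum number of idle connections

maxWait is the maximum time to wait (in milliseconds) when all connections in the pool are in use

username is the database user name

password is the database user's password

driverClassName is the JDBC driver class

url is the database URL

You also need to put the jar of the database driver you use into Tomcat's lib directory.

If you use another data source implementation, such as DBCP, add one more attribute to the <Resource tag:

factory="org.apache.commons.dbcp.BasicDataSourceFactory"

and, of course, put the DBCP jars into Tomcat's lib directory as well.

The advantage is that later projects needing these jars can share them, which suits the deployment phase of a project.

During individual development, however, deploying several projects under one Tomcat slows down startup, and different projects may use different data sources, which causes trouble. Hence configuration method 2.

2. Configure in the project:

2.1 Use your own DBCP data source

Create a META-INF folder under WebRoot and, inside it, a file named context.xml

with the same content as in configuration 1,

and add one more attribute to the <Resource tag:

factory="org.apache.commons.dbcp.BasicDataSourceFactory"

This way the required jars can go directly into WEB-INF/lib, independent of the web container (Tomcat).

One last reminder: some of you may say that Tomcat already ships with a DBCP jar. It does, but if you assume that means you can skip adding the DBCP jars and still use the configuration above, you are 100% going to get an error.

Tomcat repackages the corresponding jar, so you should change

factory="org.apache.tomcat.dbcp.dbcp.BasicDataSourceFactory" to

factory="org.apache.commons.dbcp.BasicDataSourceFactory"

and add the jars DBCP depends on (commons-dbcp.jar and commons-pool.jar).

You can find them under the commons project at www.apache.org

2.2 Use the DBCP data source bundled with Tomcat

Create a META-INF folder under WebRoot and, inside it, a file named context.xml

with the same content as in configuration 1.

Here the factory attribute does not need to be added; if you do set it, use

factory="org.apache.tomcat.dbcp.dbcp.BasicDataSourceFactory"

and do not add any extra jars.

Finally, whichever configuration you use, the database driver jar must be placed in the tomcat/lib directory.

JNDI usage example: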

    try {
        Context context = new InitialContext();
        // "java:/comp/env/" is a fixed prefix; what follows is the value of the
        // name attribute of the Resource in context.xml
        DataSource ds = (DataSource) context.lookup("java:/comp/env/jdbc/tango");
        Connection conn = ds.getConnection();
        Statement stmt = conn.createStatement();
        ResultSet set = stmt.executeQuery("SELECT id,name,age FROM user_lzy");
        while (set.next()) {
            System.out.println(set.getString("name"));
        }
        // etc.
        set.close();
        stmt.close();
        conn.close();  // returns the connection to the pool
    } catch (NamingException e) {
        e.printStackTrace();
    } catch (SQLException e) {
        e.printStackTrace();
    }

Thanks!

There are many ways to process Excel data in Java, such as Apache POI or JXL.

First, here is one way to read Excel data (using the jxl.jar package).

    package com.ccniit.readexcel;

    import java.io.File;
    import java.io.FileInputStream;
    import java.io.FileNotFoundException;
    import java.io.IOException;
    import java.io.InputStream;
    import java.util.ArrayList;
    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    import jxl.Sheet;
    import jxl.Workbook;
    import jxl.read.biff.BiffException;

    public class ExcelHander {

        // Returns the column names taken from the first row of the first sheet.
        public static String[] getColName(String desc) {
            InputStream is = null;
            String[] colNames = null;
            try {
                is = new FileInputStream(new File(desc));
                Workbook wb = Workbook.getWorkbook(is);
                Sheet sheet = wb.getSheet(0);
                int cols = sheet.getColumns();
                colNames = new String[cols];
                for (int i = 0; i < cols; i++) {
                    colNames[i] = sheet.getCell(i, 0).getContents();
                    // System.out.println("column name: " + colNames[i]);
                }
                is.close();
            } catch (FileNotFoundException e) {
                e.printStackTrace();
            } catch (BiffException e) {
                e.printStackTrace();
            } catch (IOException e) {
                e.printStackTrace();
            }
            return colNames;
        }

        // Reads every data row (from the second row on) into a map keyed by column name.
        public List<Map<String, Object>> readExcel(String desc) {
            List<Map<String, Object>> datas = null;
            try {
                InputStream is = new FileInputStream(new File(desc));
                Workbook wb = Workbook.getWorkbook(is);
                if (wb == null) {
                    return null;
                }
                Sheet sheet = wb.getSheet(0);
                int cols = sheet.getColumns();
                int rows = sheet.getRows();
                datas = new ArrayList<Map<String, Object>>();
                for (int i = 1; i < rows; i++) {
                    Map<String, Object> data = new HashMap<String, Object>();
                    for (int j = 0; j < cols; j++) {
                        String key = sheet.getCell(j, 0).getContents();
                        // System.out.println("key:" + key);
                        Object value = (Object) sheet.getCell(j, i).getContents();
                        // System.out.println("value:" + value.toString());
                        data.put(key, value);
                    }
                    datas.add(data);
                }
                is.close();
                wb.close();
            } catch (FileNotFoundException e) {
                e.printStackTrace();
            } catch (BiffException e) {
                e.printStackTrace();
            } catch (IOException e) {
                e.printStackTrace();
            }
            return datas;
        }
    }

Goodbye, My 2008

This post is dedicated to our 2008:

may every bit of it stay in our hearts forever....

再见我的2008--杨天宁

还有一点点的时间

让我们用来回忆

还有一点点的时间

可以用来哭泣

可以不管得到还是失去

轻轻合上眼睛

紧紧抱着它啊

时间这样飞逝

我们就这样长大

我们哭着笑着唱着

在勇敢地成长

想想身边每个朋友

你们是否不再孤单害怕

听时间开始倒记

这样随音乐大声唱起

再见我的2008年

好怀念你在我的身边

当昨天它一点点走远

我知道我们不能回到从前

再见我的2008年

我记得你的每一个瞬间

无论明天悲喜或是改变

只希望我们都能快乐一点

钟声它已走远...

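2008: so much happened this year. Some of it you may already have forgotten, but some will stay in your heart and never fade.

As 2008 is about to leave us, let us look back on everything we went through this year. This year we cried, laughed, suffered, struggled, worked hard, took things seriously, fell down, got back up, and had regrets... Whether you reached your goals and realized your dreams this year, or made mistakes that cannot be undone and hurt the people around you, all of it will pass, because 2008 comes only once in your life, and it is gradually slipping away from us...

The great snow disaster in southern China at the start of the year (a family member slipped on the ice and was injured)

The hand, foot, and mouth disease outbreak

The Tibetan independence incident

"China heart" avatars becoming popular across the internet

Chinese people around the world holding patriotic activities in firm opposition to Tibetan independence

The Olympic torch relay being obstructed by Tibetan independence activists

The May 12 Wenchuan earthquake, in which countless people lost family and friends (our school was damaged and had to resume classes elsewhere)

8 p.m. on August 8, 2008: the opening of the Beijing Olympics

Liu Xiang withdrawing from competition with a leg injury, which became a topic of nationwide discussion

The tainted milk powder scandal, a heavy blow to China's dairy industry

The financial crisis that began in the United States and swept the world

2008 was the Olympic year that belonged to the Chinese people. From the moment four years ago when we learned we would host the Games, through the torch relay, the successful opening, the athletes' thrilling competition, and finally the successful closing ceremony, each of these left an unforgettable moment in our hearts. The athletes who gave their sweat and fought tenaciously are etched in our memories too...

2008 was also a year of disasters for China. A sudden earthquake took beloved family and friends from countless people, along with their cherished homes... Falling down does not matter; in the end we got back up. When the terrible news came, people across the country reached out to help, donating money and supplies to help the victims rebuild their homes. It showed us the hope of China: our tradition of mutual help has not been forgotten. The trial of one disaster made the world see more clearly where this nation's strength lies.

......

For me, 2008 was also a milestone year, because this year I said goodbye to university life and began a new journey. I do not know how well I rounded it off, but having no regrets is enough; after all, I did my best. Let all my memories from university and before stop at this year and stay deep in my heart...

Time pushes us forward and we cannot stay where we are. We can only bury yesterday's memories in our hearts as nourishment, sow with what we have now, and hope that tomorrow brings brilliant blossoms...

Goodbye, 2008; goodbye, my university life. We say "see you again," yet we never will. I hope to achieve something in the next ten years, and I hope that from 2009 on the world keeps moving toward prosperity and stability, and that I find a job without trouble.....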

Notes I put together during project development, for your reference.

1. Garbled Chinese when a page is displayed. Usually

    <%@ page contentType="text/html;charset=gb2312"%>

is enough to fix it.

2. Garbled Chinese when reading data from the request can be handled as follows.

(1) GET requests can be handled in two ways.

Method 1:

In server.xml in Tomcat's configuration directory (conf), in the Connector element

    <Connector port="8080" protocol="HTTP/1.1"
               connectionTimeout="20000"
               redirectPort="8443"
               URIEncoding="gb2312"/>

add the URIEncoding="gb2312" attribute (the last line above).

Method 2: what is usually called re-encoding.

    <%
    String name = request.getParameter("name");
    // recover the raw bytes (Tomcat decoded them as ISO-8859-1 by default),
    // then decode them as GB2312
    byte[] b = name.getBytes("ISO-8859-1");
    name = new String(b, "GB2312");
    %>

This can be shortened to:

    <%=new String(request.getParameter("name").getBytes("ISO-8859-1"), "GB2312")
    %>
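Why re-encoding works can be shown with a few lines of Python. The sketch assumes the client sends the parameter as GB2312 bytes and the server decodes them as ISO-8859-1 (the default in Tomcat of this era unless URIEncoding is set); the variable names are chosen for illustration only.

```python
original = "中文"

# The client sends GB2312 bytes; the server wrongly decodes them as ISO-8859-1.
raw_bytes = original.encode("gb2312")
garbled = raw_bytes.decode("iso-8859-1")

# Re-encoding: turn the garbled string back into the raw bytes,
# then decode those bytes with the charset the client actually used.
recovered = garbled.encode("iso-8859-1").decode("gb2312")

print(garbled != original)    # True
print(recovered == original)  # True
```

ISO-8859-1 maps every byte to exactly one character, so the round trip through it loses nothing, and the original bytes can always be recovered before decoding them correctly.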

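(2) POST requests

Add the following code to the JSP page:

    <%request.setCharacterEncoding("gb2312");%>

Note that this code must come before any request content is read.

3. Those are the ways to deal with garbled text in JSP pages. Finally, here is how to tell whether a request is sent as GET or POST.

(1) For an ordinary form submission, the method attribute of the form decides.

(2) Parameters passed through a URL link are submitted as GET.

(3) Parameters passed through URL rewriting are submitted as GET.

Thanks for reading.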

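I am a second-year software engineering student. We have studied Java for a year but have not started Java Web yet. I would like to get ahead and learn more, but I am a bit confused. I suppose I should start with JSP, and learning JSP means learning HTML as well, which brings in CSS and JS. If I want a site to look better I also need to learn Photoshop. Once all that is in place I might look at frameworks, but there are so many Java frameworks now; which should I choose, and which are good? Last would come J2EE. I have read online that some people say you can learn J2EE without EJB. Is that true? And finally, after learning all of this, AJAX is popular now, so I would have to learn that too. So where should I start? One person does not have the energy to learn everything, but without it, what I build will not be as good as other people's in appearance or in other respects. I hope the experts here can give me some advice. Thank you.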

|